Researchers at IBM in Zurich have managed to create accurate pictures of a molecule for the first time using non-contact atomic force microscopy (AFM).

The ability to image a molecule will help to open up atomic-scale electronics, using molecules as switches and transistors.

“Though not an exact comparison, if you think about how a doctor uses an X-ray to image bones and organs inside the human body, we are using the atomic force microscope to image the atomic structures that are the backbones of individual molecules,” said IBM Researcher Gerhard Meyer. “Scanning probe techniques offer amazing potential for prototyping complex functional structures and for tailoring and studying their electronic and chemical properties on the atomic scale.”

The team’s current publication follows on the heels of another experiment published just two months ago in the June 12 issue of Science (Volume 324, Issue 5933, pp. 1428 – 1431) where IBM scientists measured the charge states of atoms using an AFM. These breakthroughs will open new possibilities for investigating how charge transmits through molecules or molecular networks. Understanding the charge distribution at the atomic scale is essential for building smaller, faster and more energy-efficient computing components than today’s processors and memory devices.

IBM Research – Zurich scientists Leo Gross, Fabian Mohn, Nikolaj Moll and Gerhard Meyer, in collaboration with Peter Liljeroth of Utrecht University, used an AFM operated in an ultrahigh vacuum and at very low temperatures (–268°C or –451°F) to image the chemical structure of individual pentacene molecules. With their AFM, the IBM scientists, for the first time ever, were able to look through the electron cloud and see the atomic backbone of an individual molecule.

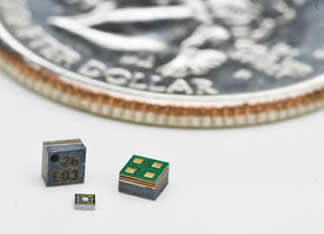

The AFM uses a sharp metal tip to measure the tiny forces between the tip and the sample, such as a molecule, to create an image. In the present experiments, the molecule investigated was pentacene. Pentacene is an oblong organic molecule consisting of 22 carbon atoms and 14 hydrogen atoms measuring 1.4 nanometers in length. The spacing between neighbouring carbon atoms is only 0.14 nanometers and in the experimental image, the hexagonal shapes of the five carbon rings as well as the carbon atoms in the molecule are clearly resolved. Even the positions of the hydrogen atoms of the molecule can be deduced from the image.
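As a quick check on the atom counts quoted above: pentacene belongs to the linear acenes, hydrocarbons made of benzene rings fused in a row, and an acene with n rings has the formula C(4n+2)H(2n+4). The short Python sketch below applies that rule; the function name `acene_formula` is purely illustrative and does not come from the IBM work.

```python
def acene_formula(rings: int) -> tuple[int, int]:
    """Return (carbon, hydrogen) atom counts for a linear acene.

    A linear acene with n fused benzene rings has the formula
    C(4n+2)H(2n+4): benzene (n=1) is C6H6, naphthalene (n=2) is C10H8.
    """
    if rings < 1:
        raise ValueError("an acene needs at least one ring")
    return 4 * rings + 2, 2 * rings + 4


# Pentacene is the five-ring member of the series.
carbons, hydrogens = acene_formula(5)
print(f"Pentacene: C{carbons}H{hydrogens}")
```

For five rings this gives 22 carbon atoms and 14 hydrogen atoms, matching the figures in the article.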

“The key to achieving atomic resolution was an atomically sharp and defined tip apex as well as the very high stability of the system,” recalls IBM scientist Leo Gross. “We prepared our tip by deliberately picking up single atoms and molecules and showed that it is the foremost tip atom or molecule that governs the contrast and resolution of our AFM measurements.”

A tip terminated with a carbon monoxide (CO) molecule yielded the optimum contrast at a tip height of approximately 0.5 nanometers above the molecule being imaged and—acting like a powerful magnifying glass—resolved the individual atoms within the pentacene molecule, revealing its exact atomic-scale chemical structure.

Furthermore, the scientists were able to derive a complete three-dimensional force map of the molecule investigated. “To obtain a complete force map the microscope needed to be highly stable, both mechanically and thermally, to ensure that both the tip of the AFM and the molecule remained unaltered during the more than 20 hours of data acquisition,” says Fabian Mohn, who is working on his PhD thesis at IBM Research – Zurich.
